Hierarchical Clustering to Perform Product Segmentation

Product Segmentation Case Study

Context¶

When you think of sneakers for a trip, the importance of good footwear cannot be discarded, and the obvious brands that come to mind are Adidas and Nike. Adidas vs Nike is a constant debate as the two giants in the apparel market, with a large market cap and market share, battle it out to come on top. As a newly hired Data Scientist in a market research company, you have been given the task of extracting insights from the data of men’s and women’s shoes, and grouping products together to identify similarities and differences between the product range of these renowned brands.

Objective

To perform an exploratory data analysis and cluster the products based on various factors

Key Questions

- Which variables are most important for clustering?

- How each cluster is different from the others?

- What are the business recommendations?

Data Description

The dataset consists of 3268 products from Nike and Adidas with features of information including their ratings, discount, sales price, listed price, product name, and the number of reviews.

- Product Name: Name of the product

- Product ID: ID of the product

- Listing Price: Listed price of the product

- Sale Price: Sale price of the product

- Discount: Percentage of discount on the product

- Brand: Brand of the product

- Rating: Rating of the product

- Reviews: Number of reviews for the product

Let’s start coding!

Importing necessary libraries

In [1]:

# this will help in making the Python code more structured automatically (good coding practice)

%load_ext nb_black

# Libraries to help with reading and manipulating data

import numpy as np

import pandas as pd

# Libraries to help with data visualization

import matplotlib.pyplot as plt

import seaborn as sns

# Removes the limit for the number of displayed columns

pd.set_option("display.max_columns", None)

# Sets the limit for the number of displayed rows

pd.set_option("display.max_rows", 200)

# to scale the data using z-score

from sklearn.preprocessing import StandardScaler

# to compute distances

from scipy.spatial.distance import pdist

# to perform hierarchical clustering, compute cophenetic correlation, and create dendrograms

from sklearn.cluster import AgglomerativeClustering

from scipy.cluster.hierarchy import dendrogram, linkage, cophenet

In [2]:

# loading the dataset

data = pd.read_csv("data_add_nik.csv")

In [3]:

data.shape

Out[3]:

(3268, 8)

- The dataset has 3268 rows and 8 columns

In [4]:

# viewing a random sample of the dataset data.sample(n=10, random_state=1)

Out[4]:

| Product Name | Product ID | Listing Price | Sale Price | Discount | Brand | Rating | Reviews | |

|---|---|---|---|---|---|---|---|---|

| 255 | Women’s adidas Originals POD-S3.1 Shoes | CG6188 | 13999 | 6999 | 50 | Adidas ORIGINALS | 3.3 | 8 |

| 1551 | Men’s adidas Originals Superstar Pure Shoes | FV3013 | 11999 | 11999 | 0 | Adidas ORIGINALS | 3.9 | 10 |

| 1352 | Men’s adidas Originals Superstar Shoes | FV2806 | 7999 | 7999 | 0 | Adidas ORIGINALS | 4.4 | 42 |

| 1060 | Men’s adidas Football Nemeziz 19.3 Indoor Shoes | F34411 | 5999 | 3599 | 40 | Adidas SPORT PERFORMANCE | 4.5 | 75 |

| 808 | Men’s adidas Sport Inspired Court 80s Shoes | EE9676 | 5999 | 3599 | 40 | Adidas CORE / NEO | 4.5 | 55 |

| 836 | Men’s adidas Running Stargon 1.0 Shoes | CM4935 | 4799 | 3839 | 20 | Adidas CORE / NEO | 3.5 | 21 |

| 2107 | Men’s adidas Originals Yung-96 Chasm Shoes | EE7238 | 7999 | 4799 | 40 | Adidas ORIGINALS | 3.0 | 62 |

| 3002 | Nike SB Air Max Stefan Janoski 2 | AQ7477-009 | 0 | 9995 | 0 | Nike | 2.6 | 11 |

| 2329 | Men’s adidas Originals Rivalry Low Shoes | FV4287 | 10999 | 10999 | 0 | Adidas ORIGINALS | 2.8 | 8 |

| 602 | Men’s adidas Sport Inspired Lite Racer RBN Shoes | F36642 | 5599 | 3919 | 30 | Adidas CORE / NEO | 3.0 | 56 |

In [5]:

# copying the data to another variable to avoid any changes to original data df = data.copy()

In [6]:

# fixing column names

df.columns = [c.replace(" ", "_") for c in df.columns]

In [7]:

# let's look at the structure of the data df.info()

<class 'pandas.core.frame.DataFrame'> RangeIndex: 3268 entries, 0 to 3267 Data columns (total 8 columns): # Column Non-Null Count Dtype --- ------ -------------- ----- 0 Product_Name 3268 non-null object 1 Product_ID 3268 non-null object 2 Listing_Price 3268 non-null int64 3 Sale_Price 3268 non-null int64 4 Discount 3268 non-null int64 5 Brand 3268 non-null object 6 Rating 3268 non-null float64 7 Reviews 3268 non-null int64 dtypes: float64(1), int64(4), object(3) memory usage: 204.4+ KB

We won’t need Product_ID for analysis, so let’s drop this column.

In [8]:

df.drop("Product_ID", axis=1, inplace=True)

In [9]:

# let's check for duplicate observations df.duplicated().sum()

Out[9]:

117

- There are 117 duplicate observations. We will remove them from the data.

In [10]:

df = df[(~df.duplicated())].copy()

Let’s take a look at the summary of the data

In [11]:

df.describe()

Out[11]:

| Listing_Price | Sale_Price | Discount | Rating | Reviews | |

|---|---|---|---|---|---|

| count | 3151.000000 | 3151.000000 | 3151.000000 | 3151.000000 | 3151.000000 |

| mean | 7045.960330 | 5983.166931 | 27.860997 | 3.285687 | 41.891146 |

| std | 4652.089511 | 4173.708897 | 22.442753 | 1.371611 | 31.283464 |

| min | 0.000000 | 449.000000 | 0.000000 | 0.000000 | 0.000000 |

| 25% | 4599.000000 | 2999.000000 | 0.000000 | 2.600000 | 12.000000 |

| 50% | 5999.000000 | 4599.000000 | 40.000000 | 3.600000 | 40.000000 |

| 75% | 8999.000000 | 7799.000000 | 50.000000 | 4.400000 | 69.000000 |

| max | 29999.000000 | 36500.000000 | 60.000000 | 5.000000 | 223.000000 |

Observations

- 0 in the listing price indicates missing values.

- The average listing price is 7046.

- The average sale price is 5983.

- The average discount is 28%.

- The average rating is 3.3.

- The average number of reviews is 42.

In [12]:

# let's check how many products have listing price 0 (df.Listing_Price == 0).sum()

Out[12]:

336

In [13]:

# let's check the products which have listing price 0 df[(df.Listing_Price == 0)]

Out[13]:

| Product_Name | Listing_Price | Sale_Price | Discount | Brand | Rating | Reviews | |

|---|---|---|---|---|---|---|---|

| 2625 | Nike Air Force 1 ’07 Essential | 0 | 7495 | 0 | Nike | 0.0 | 0 |

| 2626 | Nike Air Force 1 ’07 | 0 | 7495 | 0 | Nike | 0.0 | 0 |

| 2627 | Nike Air Force 1 Sage Low LX | 0 | 9995 | 0 | Nike | 0.0 | 0 |

| 2628 | Nike Air Max Dia SE | 0 | 9995 | 0 | Nike | 0.0 | 0 |

| 2629 | Nike Air Max Verona | 0 | 9995 | 0 | Nike | 0.0 | 0 |

| … | … | … | … | … | … | … | … |

| 3254 | Nike Mercurial Vapor 13 Club MG | 0 | 4995 | 0 | Nike | 0.0 | 0 |

| 3257 | Air Jordan 5 Retro | 0 | 15995 | 0 | Nike | 3.3 | 3 |

| 3260 | Nike Tiempo Legend 8 Academy TF | 0 | 6495 | 0 | Nike | 0.0 | 0 |

| 3262 | Nike React Metcon AMP | 0 | 13995 | 0 | Nike | 3.0 | 1 |

| 3266 | Nike Air Max 98 | 0 | 16995 | 0 | Nike | 4.0 | 4 |

336 rows × 7 columns

In [14]:

df[(df.Listing_Price == 0)].describe()

Out[14]:

| Listing_Price | Sale_Price | Discount | Rating | Reviews | |

|---|---|---|---|---|---|

| count | 336.0 | 336.000000 | 336.0 | 336.000000 | 336.000000 |

| mean | 0.0 | 11203.050595 | 0.0 | 2.797619 | 8.261905 |

| std | 0.0 | 4623.825788 | 0.0 | 2.150445 | 19.708393 |

| min | 0.0 | 1595.000000 | 0.0 | 0.000000 | 0.000000 |

| 25% | 0.0 | 7995.000000 | 0.0 | 0.000000 | 0.000000 |

| 50% | 0.0 | 10995.000000 | 0.0 | 3.950000 | 1.000000 |

| 75% | 0.0 | 13995.000000 | 0.0 | 4.700000 | 6.000000 |

| max | 0.0 | 36500.000000 | 0.0 | 5.000000 | 223.000000 |

- There are 336 observations that have missing values in the listing price column

- We see that the discount for the products with listing price 0 is 0.

- So, we will replace the listing price with the corresponding sale price for those observations.

In [15]:

df.loc[(df.Listing_Price == 0), ["Listing_Price"]] = df.loc[

(df.Listing_Price == 0), ["Sale_Price"]

].values

In [16]:

df.Listing_Price.describe()

Out[16]:

count 3151.000000 mean 8240.573151 std 4363.018245 min 899.000000 25% 4999.000000 50% 7599.000000 75% 10995.000000 max 36500.000000 Name: Listing_Price, dtype: float64

In [17]:

# checking missing values df.isna().sum()

Out[17]:

Product_Name 0 Listing_Price 0 Sale_Price 0 Discount 0 Brand 0 Rating 0 Reviews 0 dtype: int64

- There are no missing values in the data.

EDA

In [18]:

# function to plot a boxplot and a histogram along the same scale.

def histogram_boxplot(data, feature, figsize=(12, 7), kde=False, bins=None):

"""

Boxplot and histogram combined

data: dataframe

feature: dataframe column

figsize: size of figure (default (12,7))

kde: whether to the show density curve (default False)

bins: number of bins for histogram (default None)

"""

f2, (ax_box2, ax_hist2) = plt.subplots(

nrows=2, # Number of rows of the subplot grid= 2

sharex=True, # x-axis will be shared among all subplots

gridspec_kw={"height_ratios": (0.25, 0.75)},

figsize=figsize,

) # creating the 2 subplots

sns.boxplot(

data=data, x=feature, ax=ax_box2, showmeans=True, color="violet"

) # boxplot will be created and a star will indicate the mean value of the column

sns.histplot(

data=data, x=feature, kde=kde, ax=ax_hist2, bins=bins, palette="winter"

) if bins else sns.histplot(

data=data, x=feature, kde=kde, ax=ax_hist2

) # For histogram

ax_hist2.axvline(

data[feature].mean(), color="green", linestyle="--"

) # Add mean to the histogram

ax_hist2.axvline(

data[feature].median(), color="black", linestyle="-"

) # Add median to the histogram

In [19]:

# selecting numerical columns

num_col = df.select_dtypes(include=np.number).columns.tolist()

for item in num_col:

histogram_boxplot(df, item)

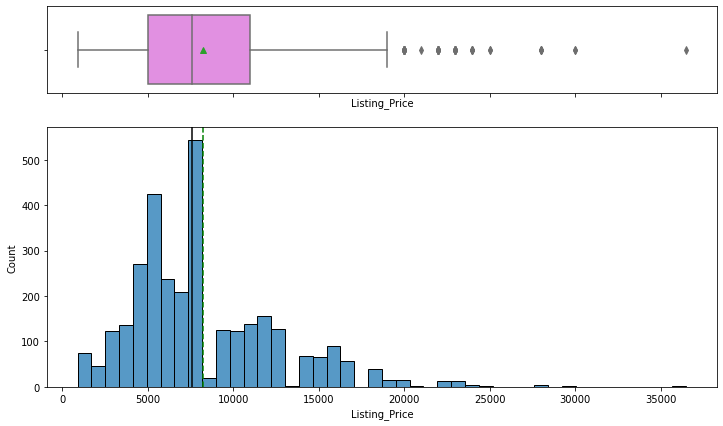

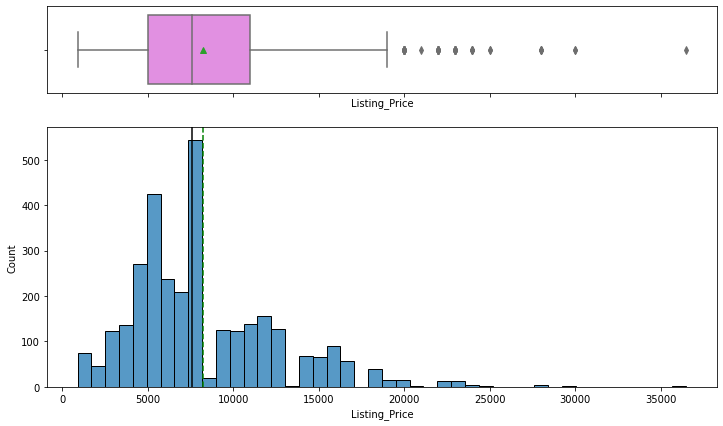

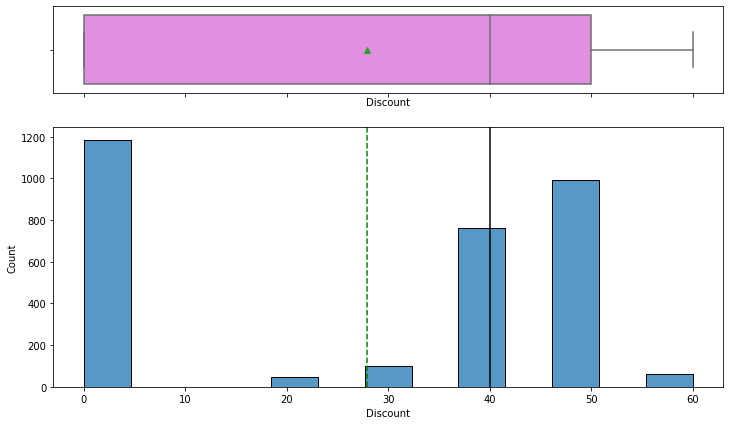

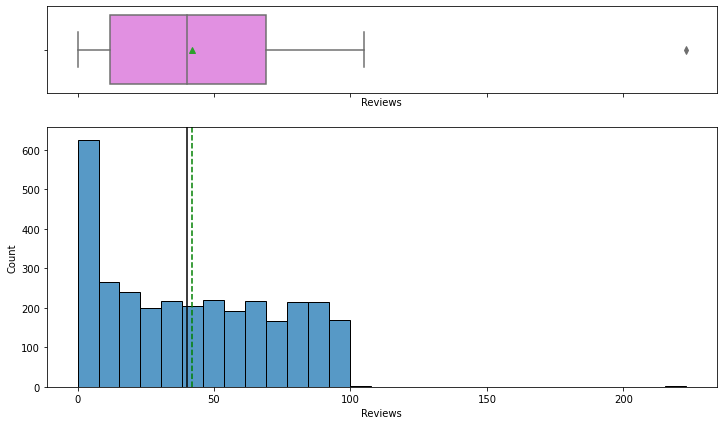

Observations

- Listing price and sale price have right-skewed distributions with upper outliers, which indicates the presence of very expensive products.

- The maximum discount given is 60%.

- Rating is left-skewed and most of the ratings are between 2.5 and 4.5.

- The number of reviews is between 1 and 100, with an outlier value above 200.

In [20]:

fig, axes = plt.subplots(3, 2, figsize=(20, 15))

fig.suptitle("CDF plot of numerical variables", fontsize=20)

counter = 0

for ii in range(3):

sns.ecdfplot(ax=axes[ii][0], x=df[num_col[counter]])

counter = counter + 1

if counter != 5:

sns.ecdfplot(ax=axes[ii][1], x=df[num_col[counter]])

counter = counter + 1

else:

pass

fig.tight_layout(pad=2.0)

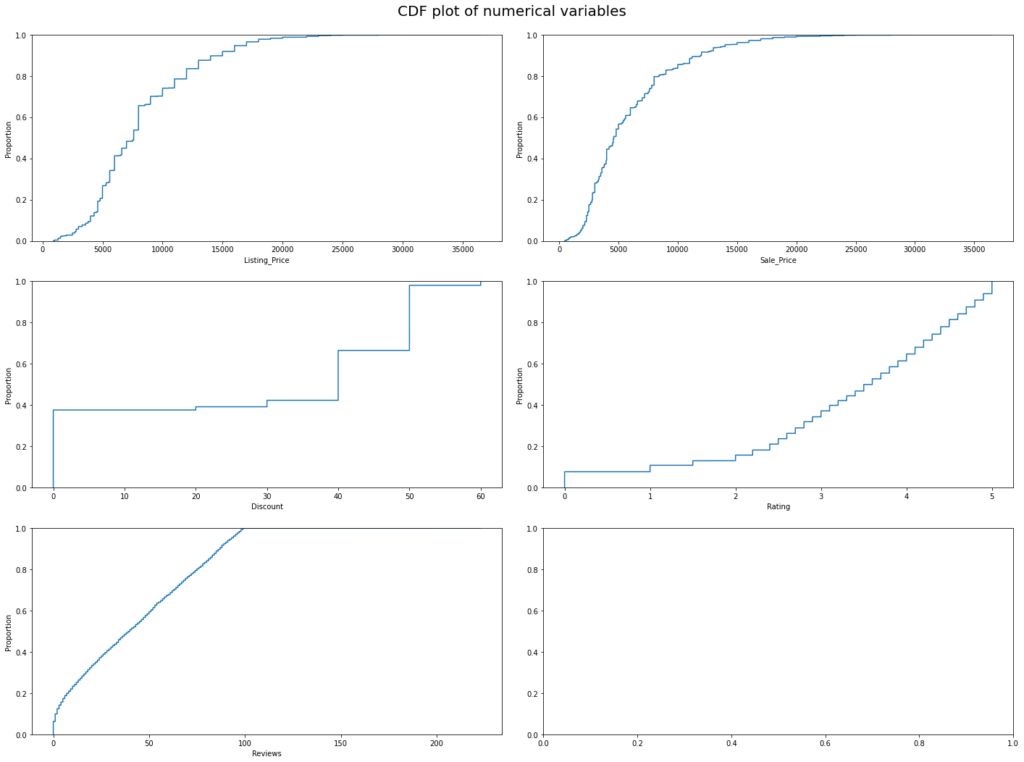

Observations

- 90% of the products have listing prices less than 15000.

- 95% of the product have a sale price of less than 15000.

- 80% of the products have at least 50% discount or less than 50%.

- 50% off the products have a rating of 3.5 or less than 3.5.

- Almost all products have 100 or fewer reviews.

In [21]:

# function to create labeled barplots

def labeled_barplot(data, feature, perc=False, n=None):

"""

Barplot with percentage at the top

data: dataframe

feature: dataframe column

perc: whether to display percentages instead of count (default is False)

n: displays the top n category levels (default is None, i.e., display all levels)

"""

total = len(data[feature]) # length of the column

count = data[feature].nunique()

if n is None:

plt.figure(figsize=(count + 1, 5))

else:

plt.figure(figsize=(n + 1, 5))

plt.xticks(rotation=90, fontsize=15)

ax = sns.countplot(

data=data,

x=feature,

palette="Paired",

order=data[feature].value_counts().index[:n].sort_values(),

)

for p in ax.patches:

if perc:

label = "{:.1f}%".format(

100 * p.get_height() / total

) # percentage of each class of the category

else:

label = p.get_height() # count of each level of the category

x = p.get_x() + p.get_width() / 2 # width of the plot

y = p.get_height() # height of the plot

ax.annotate(

label,

(x, y),

ha="center",

va="center",

size=12,

xytext=(0, 5),

textcoords="offset points",

) # annotate the percentage

plt.show() # show the plot

In [22]:

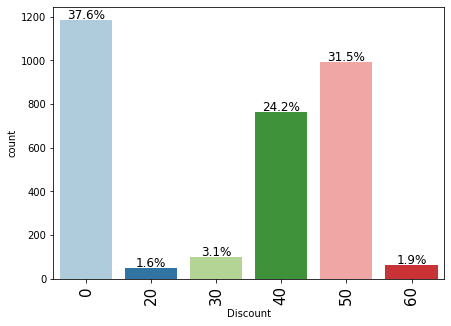

# let's explore discounts further labeled_barplot(df, "Discount", perc=True)

Observations

- 37.6% of products did not have any discounts.

- More than 50% of the products have 40-50% discount.

Bivariate Analysis

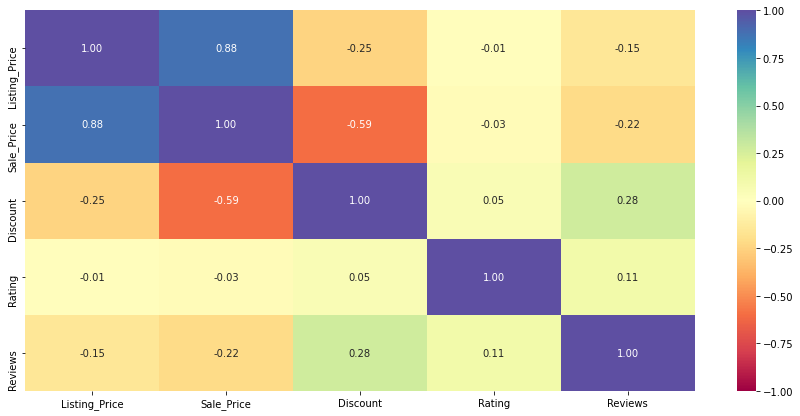

Let’s check for correlations.

In [23]:

plt.figure(figsize=(15, 7)) sns.heatmap(df[num_col].corr(), annot=True, vmin=-1, vmax=1, fmt=".2f", cmap="Spectral") plt.show()

Observations

- The sale price and the listing price are highly positively correlated, which is obvious.

- Discount is somewhat negatively correlated with listing price or sale price, which indicates that expensive products either have fewer discounts or no discounts at all.

In [24]:

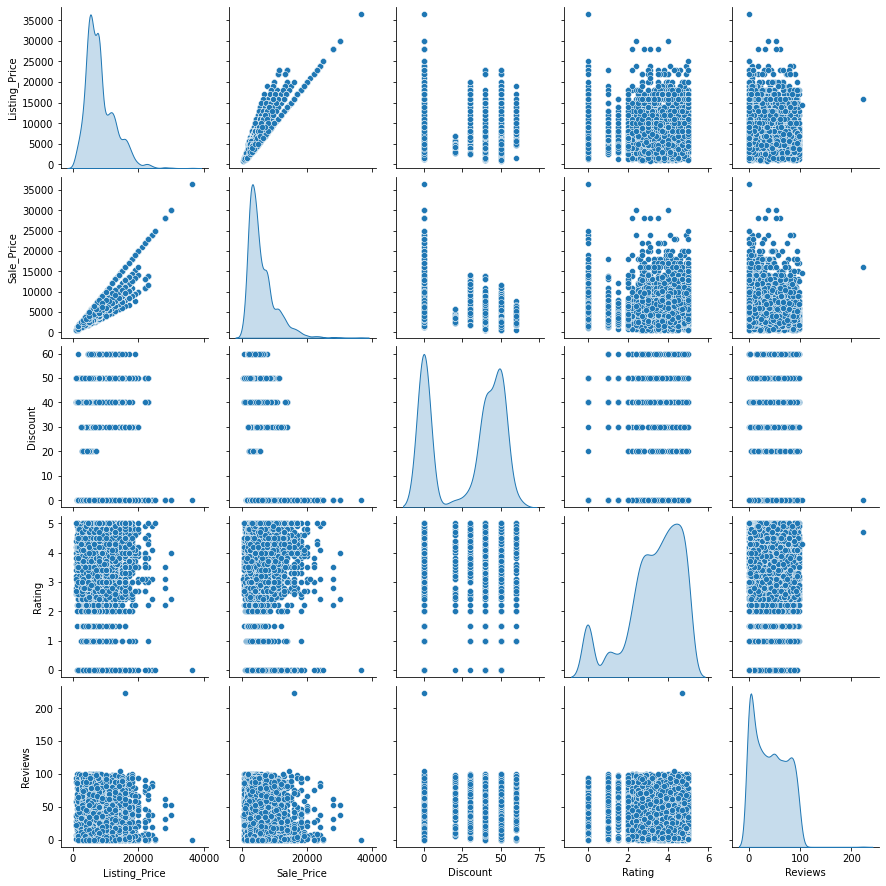

sns.pairplot(data=df[num_col], diag_kind="kde") plt.show()

Observations

- 50-60% discounts are mostly given to products whose listing price is 10,000 or less.

- Many products have no discount.

- As seen before, most of the products have 100 or fewer reviews.

Data Preprocessing

In [25]:

# variables used for clustering num_col

Out[25]:

['Listing_Price', 'Sale_Price', 'Discount', 'Rating', 'Reviews']

In [26]:

# scaling the dataset before clustering scaler = StandardScaler() subset = df[num_col].copy() subset_scaled = scaler.fit_transform(subset)

In [27]:

# creating a dataframe of the scaled columns subset_scaled_df = pd.DataFrame(subset_scaled, columns=subset.columns)

Hierarchical Clustering

In [28]:

# list of distance metrics

distance_metrics = ["euclidean", "chebyshev", "mahalanobis", "cityblock"]

# list of linkage methods

linkage_methods = ["single", "complete", "average", "weighted"]

high_cophenet_corr = 0

high_dm_lm = [0, 0]

for dm in distance_metrics:

for lm in linkage_methods:

Z = linkage(subset_scaled_df, metric=dm, method=lm)

c, coph_dists = cophenet(Z, pdist(subset_scaled_df))

print(

"Cophenetic correlation for {} distance and {} linkage is {}.".format(

dm.capitalize(), lm, c

)

)

if high_cophenet_corr < c:

high_cophenet_corr = c

high_dm_lm[0] = dm

high_dm_lm[1] = lm

Cophenetic correlation for Euclidean distance and single linkage is 0.6391818886918261. Cophenetic correlation for Euclidean distance and complete linkage is 0.7162924652763346. Cophenetic correlation for Euclidean distance and average linkage is 0.7664519011885238. Cophenetic correlation for Euclidean distance and weighted linkage is 0.6047194146480928. Cophenetic correlation for Chebyshev distance and single linkage is 0.5854266041780847. Cophenetic correlation for Chebyshev distance and complete linkage is 0.6326026537632393. Cophenetic correlation for Chebyshev distance and average linkage is 0.717763178117288. Cophenetic correlation for Chebyshev distance and weighted linkage is 0.5648124256800041. Cophenetic correlation for Mahalanobis distance and single linkage is 0.6325008742275654. Cophenetic correlation for Mahalanobis distance and complete linkage is 0.5998379141946822. Cophenetic correlation for Mahalanobis distance and average linkage is 0.7415915516679394. Cophenetic correlation for Mahalanobis distance and weighted linkage is 0.6512605002990925. Cophenetic correlation for Cityblock distance and single linkage is 0.6477024162147822. Cophenetic correlation for Cityblock distance and complete linkage is 0.7190677446354251. Cophenetic correlation for Cityblock distance and average linkage is 0.779045948953341. Cophenetic correlation for Cityblock distance and weighted linkage is 0.6019581001739139.

In [29]:

# printing the combination of distance metric and linkage method with the highest cophenetic correlation

print(

"Highest cophenetic correlation is {}, which is obtained with {} distance and {} linkage.".format(

high_cophenet_corr, high_dm_lm[0].capitalize(), high_dm_lm[1]

)

)

Highest cophenetic correlation is 0.779045948953341, which is obtained with Cityblock distance and average linkage.

Let’s explore different linkage methods with Euclidean distance only.

In [30]:

# list of linkage methods

linkage_methods = ["single", "complete", "average", "centroid", "ward", "weighted"]

high_cophenet_corr = 0

high_dm_lm = [0, 0]

for lm in linkage_methods:

Z = linkage(subset_scaled_df, metric="euclidean", method=lm)

c, coph_dists = cophenet(Z, pdist(subset_scaled_df))

print("Cophenetic correlation for {} linkage is {}.".format(lm, c))

if high_cophenet_corr < c:

high_cophenet_corr = c

high_dm_lm[0] = "euclidean"

high_dm_lm[1] = lm

Cophenetic correlation for single linkage is 0.6391818886918261. Cophenetic correlation for complete linkage is 0.7162924652763346. Cophenetic correlation for average linkage is 0.7664519011885238. Cophenetic correlation for centroid linkage is 0.7560310240212. Cophenetic correlation for ward linkage is 0.5416485654617125. Cophenetic correlation for weighted linkage is 0.6047194146480928.

In [31]:

# printing the combination of distance metric and linkage method with the highest cophenetic correlation

print(

"Highest cophenetic correlation is {}, which is obtained with {} linkage.".format(

high_cophenet_corr, high_dm_lm[1]

)

)

Highest cophenetic correlation is 0.7664519011885238, which is obtained with average linkage.

Observations

- We see that the cophenetic correlation is maximum with cityblock distance, which is the Manhattan distance, and average linkage.

- The cophenetic correlation with Euclidean distance and average linkage is slightly less than the combination with maximum cophenetic correlation.

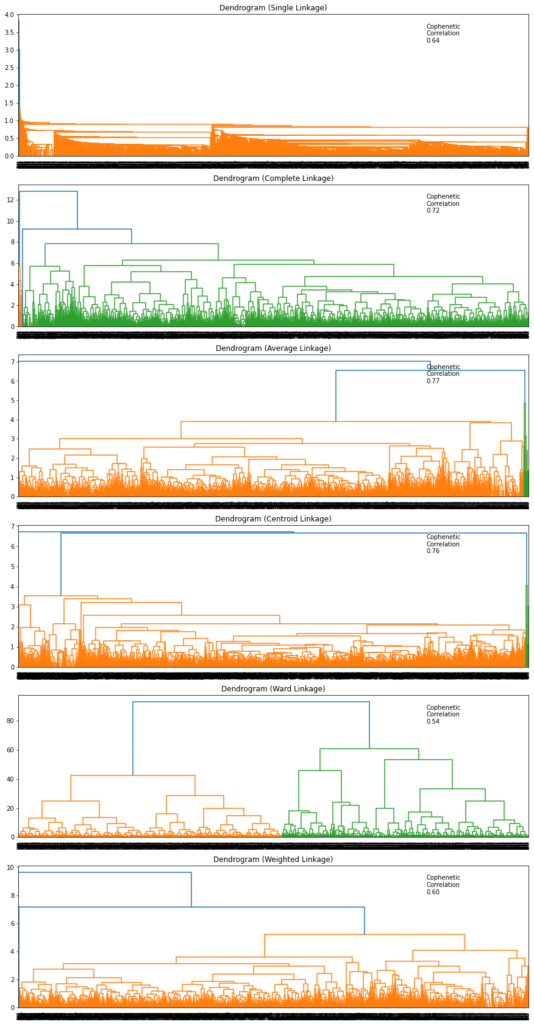

Let’s see the dendrograms for the different linkage methods.

In [32]:

# list of linkage methods

linkage_methods = ["single", "complete", "average", "centroid", "ward", "weighted"]

# lists to save results of cophenetic correlation calculation

compare_cols = ["Linkage", "Cophenetic Coefficient"]

compare = []

# to create a subplot image

fig, axs = plt.subplots(len(linkage_methods), 1, figsize=(15, 30))

# We will enumerate through the list of linkage methods above

# For each linkage method, we will plot the dendrogram and calculate the cophenetic correlation

for i, method in enumerate(linkage_methods):

Z = linkage(subset_scaled_df, metric="euclidean", method=method)

dendrogram(Z, ax=axs[i])

axs[i].set_title(f"Dendrogram ({method.capitalize()} Linkage)")

coph_corr, coph_dist = cophenet(Z, pdist(subset_scaled_df))

axs[i].annotate(

f"Cophenetic\nCorrelation\n{coph_corr:0.2f}",

(0.80, 0.80),

xycoords="axes fraction",

)

compare.append([method, coph_corr])

Observations

- Dendrogram for Ward linkage shows distinct and separate clusters.

In [33]:

# let's create a dataframe to compare cophenetic correlations for each linkage method df_cc = pd.DataFrame(compare, columns=compare_cols) df_cc

Out[33]:

| Linkage | Cophenetic Coefficient | |

|---|---|---|

| 0 | single | 0.639182 |

| 1 | complete | 0.716292 |

| 2 | average | 0.766452 |

| 3 | centroid | 0.756031 |

| 4 | ward | 0.541649 |

| 5 | weighted | 0.604719 |

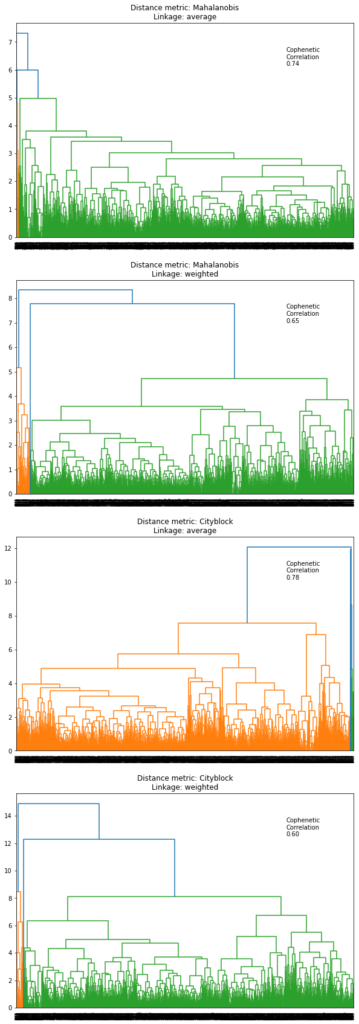

Let’s see the dendrogram for Mahalanobis and Manhattan distances with average and weighted linkage methods (as they gave high cophenetic correlation values).

In [34]:

# list of distance metrics

distance_metrics = ["mahalanobis", "cityblock"]

# list of linkage methods

linkage_methods = ["average", "weighted"]

# to create a subplot image

fig, axs = plt.subplots(

len(distance_metrics) + len(distance_metrics), 1, figsize=(10, 30)

)

i = 0

for dm in distance_metrics:

for lm in linkage_methods:

Z = linkage(subset_scaled_df, metric=dm, method=lm)

dendrogram(Z, ax=axs[i])

axs[i].set_title("Distance metric: {}\nLinkage: {}".format(dm.capitalize(), lm))

coph_corr, coph_dist = cophenet(Z, pdist(subset_scaled_df))

axs[i].annotate(

f"Cophenetic\nCorrelation\n{coph_corr:0.2f}",

(0.80, 0.80),

xycoords="axes fraction",

)

i += 1

Observations

- Out of all the dendrograms we saw, it is clear that the dendrogram with Ward linkage gave us separate and distinct clusters.

- 3 would be the appropriate number of the clusters from the dendrogram with Ward linkage method.

Let’s create 3 clusters.

In [35]:

HCmodel = AgglomerativeClustering(n_clusters=3, affinity="euclidean", linkage="ward") HCmodel.fit(subset_scaled_df)

Out[35]:

AgglomerativeClustering(n_clusters=3)

In [36]:

# adding hierarchical cluster labels to the original and scaled dataframes subset_scaled_df["HC_Clusters"] = HCmodel.labels_ df["HC_Clusters"] = HCmodel.labels_

Cluster Profiling

In [37]:

cluster_profile = df.groupby("HC_Clusters").mean()

In [38]:

cluster_profile["count_in_each_segments"] = (

df.groupby("HC_Clusters")["Sale_Price"].count().values

)

In [39]:

# let's display cluster profiles cluster_profile.style.highlight_max(color="lightgreen", axis=0)

Out[39]:

| Listing_Price | Sale_Price | Discount | Rating | Reviews | count_in_each_segments | |

|---|---|---|---|---|---|---|

| HC_Clusters | ||||||

| 0 | 7713.665285 | 7207.118135 | 0.891192 | 3.011295 | 30.126425 | 965 |

| 1 | 6188.557740 | 3358.597666 | 45.503686 | 3.347604 | 48.585995 | 1628 |

| 2 | 15138.686380 | 11523.822581 | 23.028674 | 3.579570 | 42.704301 | 558 |

In [40]:

fig, axes = plt.subplots(1, 5, figsize=(16, 6))

fig.suptitle("Boxplot of scaled numerical variables for each cluster", fontsize=20)

counter = 0

for ii in range(5):

sns.boxplot(

ax=axes[ii],

y=subset_scaled_df[num_col[counter]],

x=subset_scaled_df["HC_Clusters"],

)

counter = counter + 1

fig.tight_layout(pad=2.0)

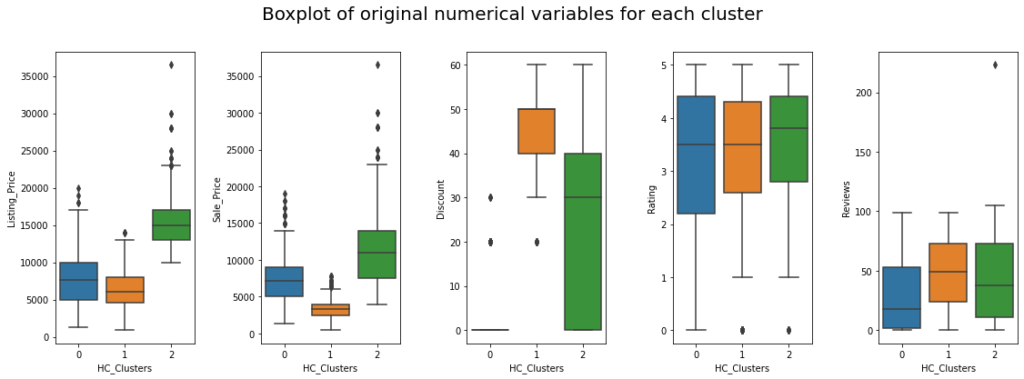

In [41]:

fig, axes = plt.subplots(1, 5, figsize=(16, 6))

fig.suptitle("Boxplot of original numerical variables for each cluster", fontsize=20)

counter = 0

for ii in range(5):

sns.boxplot(ax=axes[ii], y=df[num_col[counter]], x=df["HC_Clusters"])

counter = counter + 1

fig.tight_layout(pad=2.0)

Insights

- Cluster 0:

- This cluster contains medium-priced products, with a median listing price of approximately 7500.

- Almost no discount is given to products in these clusters.

- The median rating of this cluster is around 3.5, suggesting that customers are satisfied with 50% of the products in this cluster.

- Products in these clusters have got lesser reviews, which can be because these products are very expensive and fewer people buy them.

- Cluster 1:

- Listing price is low for products in this cluster, and the sale price is lower, as there are heavier discounts on these products.

- Almost all the products have discounts between 30% to 40%.

- 50% of products have a rating from 3.5 to 5 which indicates that customer satisfaction varies across products in this cluster.

- On average, 50 reviews are given to products in this cluster.

- Cluster 2:

- This cluster contains more expensive premium products, with a median listing price of approximately 15000 and a median sale price of 11000.

- The discount for products in this cluster varies from 0% to 60%.

- Rating is very high.

Let’s compare Cluster vs Brand

In [42]:

pd.crosstab(df.HC_Clusters, df.Brand).style.highlight_max(color="lightgreen", axis=0)

Out[42]:

| Brand | Adidas Adidas ORIGINALS | Adidas CORE / NEO | Adidas ORIGINALS | Adidas SPORT PERFORMANCE | Nike |

|---|---|---|---|---|---|

| HC_Clusters | |||||

| 0 | 0 | 249 | 214 | 115 | 387 |

| 1 | 0 | 861 | 469 | 298 | 0 |

| 2 | 1 | 1 | 223 | 193 | 140 |

Observations

- Adidas gives more discounts on their products, while Nike barely gives any.

- Nearly one-third of Nike products are premium in nature.

Business Recommendations

- Cluster 2 consists of premium products, so focused marketing campaigns can be done to target customers with higher purchasing power.

- Cluster 2 products can also be made available in showrooms situated in premium locations because people generally tend to buy premium products from showrooms.

- Cluster 1 consists of products that are sold at higher discounts, so these might be the ones that are introduced in large quantity during festive sales (like Christmas, Black Friday, New Year, etc). This strategy can be continued with high inventory.

- Cluster 0 consists of products with comparatively low and medium prices with almost no discount, so general marketing campaigns can be done for these to target the general public.